The Dark Side of Design: AI, Algorithmic Nudging, and the Rise of Deceptive Interfaces

- christopherstevens3

- May 23, 2025

Updated: May 28, 2025

Introduction

In the digital age, the fusion of artificial intelligence (AI) with user experience (UX) design has revolutionized how users interact with technology. While this integration offers enhanced personalization and efficiency, it also introduces ethical concerns. Chief among them are AI-enhanced manipulative design practices such as algorithmic nudging and dark patterns. Once manually implemented, these strategies are increasingly automated and exploit psychological vulnerabilities. Algorithmic nudging, though less overtly deceptive, leverages behavioral science to steer users toward specific outcomes, and is now amplified by predictive algorithms and user profiling (Mathur et al., 2019). Dark patterns are design choices that subtly coerce or deceive users into actions they might not intend, often to the benefit of the platform provider (OECD, 2022).

The implications of these design tactics are profound. AI-enhanced manipulative UX can compromise informed consent, dilute individual autonomy, and erode trust in digital services. When users are guided by opaque algorithms that exploit cognitive biases, their ability to make voluntary and informed decisions about personal data is significantly undermined. This raises concerns for data protection and challenges the foundational principles of fairness, transparency, and accountability in ethical AI development. Such practices can transform consent into a performative act, lacking genuine comprehension or voluntariness (Susser et al., 2019; Yeung, 2016).

Recent studies highlight the growing prevalence and sophistication of these manipulative designs. For instance, Zaheer’s (2019) study emphasizes the ethical responsibility of UX designers in shaping transparent, user-centric digital experiences that respect autonomy and informed consent. Moreover, legal and regulatory bodies increasingly recognize the need to address these concerns. The European Union's Digital Services Act (DSA) and the proposed EU Digital Fairness Act aim to curb manipulative online practices, including dark patterns (European Commission, 2025; McGrath, 2024). Similarly, the Federal Trade Commission (FTC) has issued guidelines and taken enforcement actions against deceptive design practices (Lalsinghani, 2024).

This article aims to comprehensively assess the ethical, legal, and regulatory responses to AI-enhanced manipulative design practices across jurisdictions. By analyzing emerging legislative trends, judicial interpretations, and policy recommendations, the article seeks to evaluate the sufficiency of current protections and identify actionable pathways for safeguarding user autonomy and data protection in the face of increasingly intelligent and persuasive digital interfaces. In doing so, it offers critical insights for policymakers, legal scholars, technologists, and human rights advocates navigating this rapidly evolving landscape.

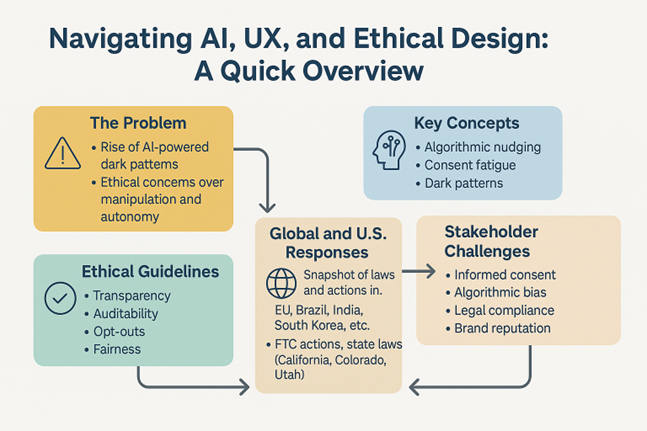

Mapping the Ethics and Regulation of AI-Driven UX Design

Before delving into the detailed analysis of algorithmic nudging, dark patterns, and regulatory responses, this visual roadmap offers a high-level summary of the paper’s core structure. It outlines the ethical challenges introduced by AI-powered interfaces, introduces foundational concepts, and maps global and U.S. legislative efforts. This overview provides a concise entry point for understanding the emerging governance landscape around ethical UX design by situating these developments within broader stakeholder implications and design responsibilities.

Figure 1: Mapping the Ethics and Regulation of AI-Driven UX Design

Key Concepts and Terms

To understand the complexities of AI-driven manipulative design, it is essential to clarify several foundational concepts. These key terms help frame the broader discussion on how AI and UX interact to influence user behavior, consent, and data protection. This infographic introduces foundational terms, which include algorithmic nudging, consent fatigue, and dark patterns. These concepts are critical to understanding how AI influences user autonomy, ethical design, and user experience.

Figure 2: Key Concepts and Terms in AI-Driven UX Design

These concepts collectively provide a framework for evaluating the technological mechanisms and the ethical implications of AI-enhanced UX design. As the article progresses, these terms anchor the analysis of ethical, legal, and regulatory responses to manipulative digital practices.

Defining the Terrain

UX design has evolved from static, rule-based interfaces to dynamic, AI-driven systems capable of real-time behavioral manipulation. Traditional UX strategies employed psychological principles, such as urgency cues and default settings, to subtly influence user decisions. However, the integration of AI has amplified these tactics. It has enabled interfaces to adapt instantaneously based on user interactions, preferences, and emotional states (Romanenko, 2025). The following infographic contrasts traditional UX techniques with AI-enhanced manipulative interfaces, illustrating how personalization and user influence have evolved from manual prompts to real-time algorithmic manipulation.

Figure 3: Evolution from Traditional UX to AI-Enhanced Manipulative Interfaces

AI-powered personalization now extends beyond mere convenience, often venturing into manipulative design. For instance, platforms utilize AI to analyze user behavior and deliver tailored prompts. These activities can nudge users toward specific actions, often without explicit awareness. This hyper-personalization can lead to scenarios where users are guided to make choices that may not align with their best interests. It can also raise ethical concerns about autonomy and informed consent (Joshi, 2025).

In the commercial sector, e-commerce platforms employ AI to present personalized pricing, limited-time offers, and checkout processes optimized to increase conversions. These strategies often leverage scarcity tactics and social proof to create a sense of urgency, compelling users to act swiftly (Lagorce, 2024). In healthcare, wellness applications use AI-driven nudges to promote certain behaviors. While potentially beneficial, these nudges can also infringe on user autonomy if they are not transparently communicated (Chiam et al., 2024). The following scenario provides an example of a company’s use of personalized pricing and urgency tactics to target a consumer:

User Scenario #1: Personalized Pricing and Urgency Tactics: Jenna shops online for wireless headphones. She is shown a message: “Only two of them remain at this price; buy in the next 10 minutes!” A countdown timer ticks ominously. The timer resets when she refreshes the page, but she does not notice. Meanwhile, her friend searches for the same item on another device and sees a lower price, without any sense of urgency. The site used Jenna’s browsing history to inflate pricing and trigger impulse buying. She feels misled, but legally, was this personalization or manipulation?
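The resetting timer in this scenario can be detected mechanically. The following is a minimal Python sketch, not a description of any real site's code: a genuine countdown points at one fixed deadline, so the deadline implied by each page load (load time plus seconds shown) should stay the same. The names `TimerObservation` and `countdown_resets`, and the two-second tolerance, are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimerObservation:
    """One page load: when it happened and what the countdown displayed."""
    loaded_at: float   # seconds elapsed since the first visit (hypothetical clock)
    remaining: float   # seconds shown on the countdown at that moment


def countdown_resets(observations, tolerance=2.0):
    """Return True if the implied deadline moves between page loads.

    An honest timer yields the same deadline (loaded_at + remaining) on
    every load. A timer that restarts on refresh yields a later one.
    """
    if len(observations) < 2:
        return False  # a single load cannot reveal a reset
    deadlines = [o.loaded_at + o.remaining for o in observations]
    return max(deadlines) - min(deadlines) > tolerance


# Jenna refreshes after three minutes and still sees a full 10 minutes.
jenna_loads = [TimerObservation(0, 600), TimerObservation(180, 600)]
# An honest timer would show 7 minutes left after the same refresh.
honest_loads = [TimerObservation(0, 600), TimerObservation(180, 420)]

print(countdown_resets(jenna_loads))   # True
print(countdown_resets(honest_loads))  # False
```

A check like this only flags the artificial urgency; it says nothing about whether the price itself was personalized.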

Moreover, the financial industry is not exempt from these practices. Digital banking services have been observed employing dark pattern design choices that trick users into taking unintended actions. Such tactics include making subscriptions difficult to cancel and obscuring fees. They can compromise user trust, potentially leading to legal and regulatory scrutiny (Kreger & Ablazevica, 2025).

The pervasive nature of AI-enhanced manipulative design underscores the need for robust ethical frameworks, legal reforms, and increased regulatory measures. As AI continues to shape user experiences across various sectors, balancing innovation with respect for user autonomy and privacy is imperative. The subsequent section will explore the global legal and regulatory responses to address these challenges.

Legal and Regulatory Landscape: Global and U.S. Efforts

As AI continues to permeate various aspects of daily life, governments worldwide are grappling with regulating its development and deployment. The rapid integration of AI into sectors such as healthcare, finance, and digital services has raised concerns about autonomy, data protection, and ethical use. Several countries and jurisdictions have enacted or proposed legislation to address these issues, aiming to balance innovation with protecting individual rights and freedoms. This map summarizes recent legislative activity worldwide, highlighting enacted laws, draft proposals, and regulatory guidelines related to AI and dark pattern governance across jurisdictions.

Figure 4: Global Legislation on AI and Dark Patterns

Global Efforts

Brazil: Brazil’s efforts include:

Artificial Intelligence Bill (PL 2338/2023): Establishes AI use guidelines focused on transparency and accountability (Martins, 2025).

Lei Geral de Proteção de Dados (LGPD): Enforced since 2020; aligns with EU GDPR standards (IAPP, 2020).

China: China’s efforts include:

Interim Measures for the Management of Generative AI Services: Regulates content moderation and data security in generative AI (China Law Translate, 2023).

Personal Information Protection Law (PIPL): Implemented in 2021, the PIPL is China's comprehensive data protection law regulating personal information processing.

European Union (EU): The EU’s efforts include:

Digital Services Act (DSA): Effective February 2024, the DSA aims to create a safer digital space by imposing obligations on digital services to manage content and protect users' rights.

EU AI Act: Adopted in 2024 and in force since August 2024, the AI Act introduces a risk-based framework for AI applications, banning certain unacceptable-risk AI practices and setting compliance requirements for high-risk systems (European Parliament, 2025).

EU General Data Protection Regulation (EU GDPR): Adopted in 2016 and applicable since May 2018, the EU GDPR sets a high standard for data protection in the EU and has influenced data privacy and protection laws worldwide.

India: India’s efforts include:

Draft Digital India Act: A draft framework aiming to regulate emerging technologies, including artificial intelligence, and replace the outdated Information Technology Act, 2000. It is expected to explicitly address dark patterns and algorithmic nudging as part of broader efforts to ensure consumer protection and accountability in digital platforms (Sheikh, 2024).

Guidelines for Prevention and Regulation of Dark Patterns: India's Ministry of Consumer Affairs officially issued these guidelines through the Central Consumer Protection Authority (CCPA). Grounded in the Consumer Protection Act, 2019, they aim to curb deceptive digital design practices (Government of India, 2023).

Saudi Arabia: Saudi Arabia’s efforts include:

AI Ethics Principles (2.0): The foundational framework for AI governance in the Kingdom. They apply to all stakeholders involved in the design, development, deployment, implementation, use, or those affected by AI systems within Saudi Arabia (Saudi Data & AI Authority, 2023).

Generative AI Guidelines: These guidelines promote the ethical, safe, and transparent use of generative AI technologies across sectors. They establish key principles, including fairness, accountability, and transparency. The goal is to ensure the responsible development and deployment of AI systems that align with societal values and mitigate potential risks (Baig & Khan, 2024).

Singapore: Singapore’s efforts include:

National AI Strategy (NAIS) 2.0: Launched in 2023, NAIS 2.0 outlines Singapore's approach to AI development, focusing on responsible innovation and public trust (Smart Nation Singapore, 2023).

Personal Data Protection Act (PDPA): Singapore's main data protection law, the PDPA governs the collection, use, and disclosure of personal data by organizations (Personal Data Protection Commission - Singapore, 2021).

South Korea: South Korea’s efforts include:

AI Framework Act: Promulgated in 2025 and effective from January 2026, this risk-based regulation imposes transparency, accountability, and human oversight obligations on high-impact AI systems (Shivhare & Park, 2025).

Amended Electronic Commerce Act: Effective February 2025, this law bans six types of dark patterns in e-commerce (e.g., hidden renewals, cancellation obstruction) (Aix, 2025).

KFTC Dark Pattern Guidelines (2023): Issued by the Korea Fair Trade Commission, these voluntary guidelines classify dark patterns into four main types and nineteen subtypes (Jung et al., 2023).

Personal Information Protection Act (PIPA), as amended: Revised in 2023, PIPA enhances user rights regarding automated decision-making, allowing users to request explanations and opt out of such processes (Personal Information Protection Commission, 2023).

Table 1 summarizes the global AI legal and regulatory developments:

Table 1: Global AI Legal and Regulatory Developments

| Country/Region | Key Legislation/Initiatives | Focus Areas | Implementation Year |
| --- | --- | --- | --- |
| Brazil | LGPD, AI Bill (PL 2338/2023) | Data protection, AI governance | 2020–2024 |
| China | PIPL, Generative AI Measures | Personal data, generative AI regulation | 2021–2023 |
| European Union | AI Act, DSA, GDPR | AI risk management, digital services, data protection | 2018–2025 |
| India | Dark Patterns Guidelines | Consumer protection, UI/UX design ethics | 2023 |
| Saudi Arabia | SDAIA AI Ethics Principles, Generative AI Guidelines | AI ethics, risk classification, transparency | 2023–2024 |
| Singapore | NAIS 2.0, PDPA | Responsible AI development, data protection | 2012–2023 |
| South Korea | PIPA, Amended E-Commerce Act, KFTC Guidelines, AI Framework Act | Automated decision-making, dark pattern bans, risk-based AI regulation | 2023–2026 |

As nations continue to develop and refine their AI legal and regulatory frameworks, it becomes increasingly important to understand the global landscape of AI governance. The following section turns to the United States' approach to AI regulation, exploring federal and state-level initiatives aimed at addressing the challenges posed by emerging technologies.

U.S. Federal Efforts

As AI and digital services become increasingly embedded in daily life, the United States faces mounting pressure to establish comprehensive federal regulations protecting consumers from manipulative design practices, such as dark patterns. While the U.S. has yet to enact overarching AI legislation, U.S. federal agencies, particularly the FTC, have initiated measures to address deceptive user interfaces and subscription practices.

FTC Initiatives: The following enforcement actions highlight the FTC’s increasing focus on algorithmic nudging and dark patterns:

FTC vs. Amazon Prime Interface: The FTC has filed a lawsuit against Amazon, accusing the company of enrolling consumers into Amazon Prime subscriptions without their consent through manipulative user-interface designs. The complaint also alleges that Amazon made the cancellation process intentionally difficult. The trial is scheduled for June 2025 (Scarcella, 2024). The following scenario is an example of how companies use manipulative user-interface designs:

User Scenario #2: Sam wants to cancel his Amazon Prime subscription. On the site, he clicks “Manage Membership” and is routed through five screens. The “Keep My Benefits” button is large and green. The actual cancel option is small, gray, and worded as “I Still Want to Cancel.” Sam accidentally reactivates his subscription twice. He gives up, believing it’s canceled, only to be charged again next month. This interface design is central to the FTC's dark pattern case against Amazon.

Click-to-Cancel Rule: To address the difficulties consumers face when canceling subscriptions, the FTC introduced the "Click-to-Cancel" rule. This rule requires businesses to make the cancellation process as straightforward as the sign-up process. While initially set to take effect in May 2025, enforcement has been delayed to July 14, 2025, to allow businesses additional time to comply (Archie et al., 2024).

DoNotPay AI Legal Claims: In September 2024, the FTC announced that DoNotPay had agreed to pay $193,000 to settle charges of deceptive claims about its AI legal services. The FTC alleged that the service misled users about its functionality and legal oversight (Federal Trade Commission, 2024a).

Epic Games Settlement: In a landmark case, the FTC finalized a $245 million settlement with Epic Games in March 2023. The agency alleged that Epic employed deceptive design tactics in its popular game, Fortnite, leading players to make unintended in-game purchases. The settlement mandated refunds to affected consumers and prohibited Epic from using dark patterns or charging consumers without explicit consent (Federal Trade Commission, 2023b).

U.S. Congressional Oversight and Legislative Developments: Despite these agency-level actions, the U.S. Congress has yet to pass comprehensive and meaningful AI legislation. The Congressional Research Service (CRS) has highlighted significant legal gaps in U.S. federal laws concerning AI and manipulative digital practices. In response, some lawmakers have proposed a 10-year moratorium on state-level AI laws to establish a unified federal framework and to promote increased AI innovation. However, this proposal has faced growing bipartisan criticism for potentially stifling state innovation and leaving consumers vulnerable without federal protection (Associated Press, 2024; Brown & O’Brien, 2024).

Table 2 summarizes current and planned U.S. federal government actions to address manipulative design practices:

Table 2: U.S. Federal Actions Addressing Manipulative Design Practices

| Initiative | Description | Status | Effective Date |
| --- | --- | --- | --- |
| Amazon Prime Litigation | Lawsuit alleging unauthorized Prime enrollments via dark patterns | Ongoing | Trial set for June 2025 |
| Click-to-Cancel Rule | Regulation mandating easy subscription cancellations | Enforcement delayed | July 14, 2025 |
| Congressional Oversight | CRS reports identifying legal gaps in AI regulation | Ongoing discussions | N/A |
| Epic Games Settlement | $245M settlement addressing deceptive in-game purchases in Fortnite | Finalized | March 2023 |
| Proposed State Regulation Moratorium | 10-year ban on state-level AI laws to unify federal oversight | Proposed | N/A |

The U.S. federal government's approach to regulating manipulative design practices is evolving, with the FTC leading enforcement actions against deceptive digital interfaces. However, the absence of comprehensive federal legislation and debates over U.S. state-level AI regulatory authority highlight the complexities of establishing a cohesive national framework. As the digital landscape advances, the interplay between U.S. federal initiatives and U.S. state-level legislative actions will be crucial in shaping adequate consumer protection.

U.S. State-Level Legislative Efforts: Despite the absence of comprehensive federal legislation regarding AI and manipulative design practices, several U.S. states have proactively enacted laws to address these emerging challenges. These state-level initiatives aim to protect consumers from deceptive user interfaces, ensure transparency in AI applications, and uphold data privacy standards. They include:

California: California’s efforts include:

Assembly Bill 2905 (AB 2905): AB 2905 requires that any prerecorded message using an artificial voice disclose this fact during the initial interaction, aiming to prevent deception in telemarketing and other communications (Howell & Allen, 2024).

California Consumer Privacy Act (CCPA) as amended by the California Privacy Rights Act (CPRA): The CPRA amends the CCPA to explicitly prohibit the use of "dark patterns" to obtain user consent, defining them as interfaces designed to subvert or impair user autonomy and decision-making (Portner, 2023). The law considers consent obtained through such means invalid (Lichti, 2022; Portner, 2022; Sieber & Carreiro, 2023).

Colorado: Colorado’s efforts include:

Colorado Privacy Act (CPA): The CPA prohibits obtaining consumer consent through dark patterns, defining them as user interfaces that impair user autonomy or decision-making. Consent acquired via such means is deemed invalid (Colorado Attorney General, 2022).

Senate Bill 205 (SB 205): SB 205, also known as the Colorado Artificial Intelligence Act, requires developers and deployers of high-risk AI systems to implement risk management policies, conduct impact assessments, and ensure transparency in AI-driven decisions to protect consumers from algorithmic discrimination (Angle & Marmor, 2024; Colorado General Assembly, 2024; Rodriguez et al., 2024; Tobon & Hansen, 2024).

Utah: Utah’s efforts include:

Artificial Intelligence Policy Act: Enacted in March 2024, this law requires entities using generative AI tools to disclose their use to consumers, particularly in regulated occupations. It also limits the ability of entities to disclaim responsibility for AI-generated content that violates consumer protection laws (Levi et al., 2024; McKell & Teuscher, 2024).

Deceptive AI Use Ban: Effective March 2025, this legislation makes it unlawful to knowingly use generative AI to create deceptive content to mislead consumers. It mandates the disclosure of AI use in high-risk interactions or when requested by consumers (Akin et al., 2025).

Table 3 summarizes U.S. State-level AI and manipulative design acts and laws:

Table 3: U.S. State-Level AI and Manipulative Design Regulations

| State | Law/Regulation | Key Provisions | Effective Date |
| --- | --- | --- | --- |
| California | CCPA/CPRA | Prohibits dark patterns in obtaining user consent | Jan 1, 2023 |
| California | SB 1047 | Would have required AI developers to assess risks and undergo annual audits | N/A (vetoed September 2024) |
| California | AB 2905 | Mandates the disclosure of AI-generated voices in communications | Jan 1, 2025 |
| Colorado | CPA | Invalidates consent obtained through dark patterns | July 1, 2023 |
| Colorado | SB 205 | Imposes risk management and transparency requirements on high-risk AI systems | Feb 1, 2026 |
| Utah | AI Policy Act | Requires disclosure of generative AI use and limits liability disclaimers | May 1, 2024 |
| Utah | Deceptive AI Use Ban | Prohibits the creation of deceptive AI content and mandates disclosure in high-risk interactions | March 27, 2025 |

As U.S. state-level AI laws and regulatory frameworks evolve to combat manipulative digital design, examining how these developments impact users and organizations is essential. Legal provisions, while crucial, must be supported by an understanding of the underlying ethical and operational challenges that AI-enhanced interfaces present. The following section explores the key challenges and risks when UX design leverages algorithmic nudging and personalization without adequate safeguards.

Challenges and Risks to Individuals and Organizations

As AI becomes increasingly integrated into UX design, it brings forth many challenges and risks that impact individuals and organizations. While AI-driven personalization and automation offer enhanced user engagement and operational efficiency, they pose significant concerns about user autonomy, regulatory compliance, ethical standards, and brand reputation. Understanding these challenges is crucial for stakeholders to responsibly navigate the complex landscape of AI-enhanced digital environments. These challenges and risks include:

Compromised User Choice and Informed Consent: AI systems often operate as "black boxes," making decisions that users cannot easily interpret. This opacity can undermine informed consent, as users may not fully understand how their data is collected, processed, or utilized. For instance, AI-driven personalization can lead to behavioral manipulation, where users are nudged towards decisions that may not align with their best interests without explicit awareness (Gruver, 2024). Such practices challenge the ethical principle of respecting user autonomy and highlight the need for transparency in AI systems.

Ethical Concerns in UX Testing and Personalization: Integrating AI into UX research and personalization strategies introduces ethical dilemmas, particularly concerning bias, privacy, and accountability. AI algorithms trained on biased data can perpetuate inequalities, leading to unfair user experiences. Additionally, the extensive data collection required for personalization raises privacy concerns, especially if users are unaware of how their data is used. Ethical UX design necessitates implementing measures to ensure fairness, transparency, and user control (Fard, 2025).

Regulatory Noncompliance and Invalid Data Collection: The deployment of AI in UX design raises significant regulatory concerns, particularly regarding data privacy and protection. Noncompliance with AI and data protection laws, such as the EU AI Act and the EU GDPR, can result in substantial legal and regulatory fines and penalties. Moreover, AI systems that collect and process data without valid consent may render the data collection process invalid, leading to further legal complications (Perforce, 2022; Tucker, 2024). Organizations must ensure their AI systems adhere to existing AI laws and regulations to mitigate these risks.

Reputational Damage: Organizations employing AI in their digital interfaces risk reputational harm if these systems lead to negative user experiences or ethical breaches. AI-induced errors, such as biased decision-making or data breaches, can erode public trust and damage a brand's image. Moreover, the lack of transparency in AI operations can lead to user skepticism and reluctance to engage with AI-driven services. Maintaining ethical standards and transparency in AI applications is essential to preserving organizational reputation (Holweg et al., 2022).

Table 4 summarizes the challenges and risks associated with integrating AI into UX design:

Table 4: Challenges and Risks in AI-Enhanced UX Design

| Challenge/Risk | Description | Impacted Stakeholders |
| --- | --- | --- |
| Compromised User Choice and Informed Consent | AI systems may influence user decisions without explicit consent, undermining autonomy. | Users, Regulatory Bodies |
| Ethical Concerns in UX Testing and Personalization | AI-driven personalization may perpetuate biases and infringe on user privacy, raising ethical issues. | Users, UX Designers, Researchers |
| Regulatory Noncompliance and Invalid Data Collection | Failure to adhere to data protection laws can result in legal penalties and invalid data practices. | Organizations, Legal Entities |
| Reputational Damage | Adverse outcomes from AI applications can erode public trust and damage brand reputation. | Organizations, Marketing Teams |

Addressing these challenges requires a multifaceted approach, including implementing transparent AI systems, ensuring compliance with data protection regulations, conducting ethical UX research, and maintaining open communication with users. By proactively managing these risks, organizations can harness the benefits of AI while safeguarding user rights and organizational integrity.

Enforcement in Practice: Case Studies and Regulatory Actions

As digital platforms increasingly integrate AI and sophisticated user interface (UI) designs, regulators worldwide are intensifying efforts to combat manipulative practices known as "dark patterns." These deceptive design strategies can compromise user autonomy, leading to unintended subscriptions, unauthorized purchases, and the inadvertent sharing of personal data. This section examines notable enforcement actions and regulatory responses, highlighting the global commitment to safeguarding consumer rights in the digital age.

Additional Case Studies on Dark Pattern Enforcement: Global legal and regulatory agencies have taken several enforcement actions against organizations using dark patterns. Their enforcement actions include:

CNIL Cookie Consent (France): In December 2024, France's CNIL issued formal notices to websites found using dark patterns in their cookie banners. These designs made rejecting cookies more difficult than accepting them, violating the EU GDPR (CNIL, 2024).

Swedish DPA Cookie Enforcement (Sweden): In April 2025, the Swedish Data Protection Authority warned firms using misleading cookie banners that did not offer genuine opt-out options, contravening the EU GDPR (Petrova, 2025; Pimentel, 2025).

These cases illustrate the growing momentum among international regulators to confront deceptive interface design. As scrutiny intensifies, digital service providers must prioritize ethical UX design and regulatory compliance to maintain consumer trust.

Algorithmic Nudging vs. Manipulation: Ethical Distinctions

In the digital age, UI designs significantly influence individual choices. Techniques like algorithmic nudging can guide users toward beneficial behaviors without restricting freedom. However, when these nudges become manipulative, they risk infringing on user autonomy. This section delves into the ethical boundaries between acceptable nudging and coercive manipulation, offering principles for responsible interface personalization.

Nudging, rooted in behavioral economics, involves subtly guiding choices without eliminating options. Ethical nudges maintain transparency and respect user autonomy. However, when nudges become opaque or exploit cognitive biases, they verge on manipulation. For instance, default settings that favor data sharing without clear disclosure can undermine informed consent. The ethical acceptability of a nudge hinges on its transparency, the user's ability to opt out, and its alignment with the user's best interests (Liew, 2023; Team Design, 2024; Zaytseva, 2024).
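The three criteria named above (transparency, the ability to opt out, and alignment with the user's interests) can be turned into a simple review checklist. The Python sketch below is one hypothetical way to do so. The `Nudge` fields and the `failed_criteria` helper are illustrative assumptions, and in practice each boolean stands for a judgment that a human reviewer would have to make.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nudge:
    """A design choice under review. Every field is a reviewer's judgment."""
    name: str
    disclosed: bool             # is the influence made clear to the user?
    easy_opt_out: bool          # can the user decline without extra friction?
    serves_user_interest: bool  # does the outcome plausibly benefit the user?


def failed_criteria(nudge):
    """Return the criteria a nudge fails. An empty list means none failed."""
    checks = (
        ("transparency", nudge.disclosed),
        ("opt-out", nudge.easy_opt_out),
        ("user interest", nudge.serves_user_interest),
    )
    return [label for label, passed in checks if not passed]


# The example from the text: defaults that favor data sharing, undisclosed.
default_sharing = Nudge("data sharing on by default", disclosed=False,
                        easy_opt_out=True, serves_user_interest=False)
# A disclosed, dismissible reminder the user asked for.
reminder = Nudge("optional hydration reminder", disclosed=True,
                 easy_opt_out=True, serves_user_interest=True)

print(failed_criteria(default_sharing))  # ['transparency', 'user interest']
print(failed_criteria(reminder))         # []
```

Returning the list of failed criteria, rather than a single verdict, keeps the output useful for design review: it shows what would need to change for the nudge to be defensible.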

Principles for Responsible Interface Personalization

As digital platforms increasingly leverage behavioral science and AI to personalize user experiences, the line between helpful guidance and coercive influence can blur. While algorithmic nudging is often presented as a tool for enhancing user engagement and well-being, it can easily cross into manipulation when transparency and voluntariness are compromised. To explore this tension, consider the following user experience scenario, which challenges the boundaries of ethical design in AI-enhanced personalization.

User Scenario #3: Ethical or Manipulative UX? Lily signs up for a wellness app. Onboarding includes a “limited-time deal” banner offering personalized coaching. A message reads, “Join 5,000 new users today, exclusive to you!” The only opt-out is a tiny gray link at the bottom. She feels pressured but unsure if the offer is tailored or a generic upsell tactic. Is this an ethical nudge toward wellness, or an exploitative design? The answer depends on transparency, context, and user agency, which are core principles of responsible UX personalization.

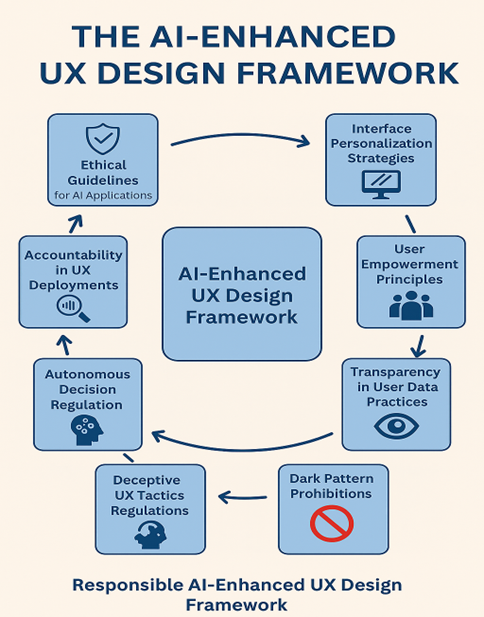

To ensure ethical and legally compliant personalization, interface designers and developers should adhere to a comprehensive set of principles rooted in user rights, fairness, and transparency.

Accountability & Auditability: Maintain detailed logs and documentation of personalization processes to support audits and ensure transparency with regulators and users (Intersoft Consulting, 2016a; International Standardization Organization, 2019).

Context-Aware Consent: Align consent prompts with contextual expectations using adaptive notices tailored to user environments (Nissenbaum, 2010; OECD AI Principles, 2024c).

Design for Vulnerability: Address the needs of minors, elderly users, and other vulnerable groups to ensure interfaces are not manipulative (UK ICO, 2020; World Economic Forum, 2021).

Fairness & Non-Discrimination: Audit personalization algorithms to prevent biased outputs or discriminatory impacts (European Commission, 2019; Future of Privacy Forum, 2023; OECD, 2013).

Informed Consent: Seek explicit, informed, and freely given consent, avoiding dark patterns like pre-ticked boxes (Intersoft Consulting, 2016b; Mathur et al., 2019; UK ICO, 2025a).

Purpose Limitation: Use data only for the stated purposes at the time of collection to avoid "function creep" (Intersoft Consulting, 2016a; UK ICO, 2025b).

Right to Explanation: Provide understandable explanations for significant automated decisions in personalization (Intersoft Consulting, 2016c; Wachter et al., 2017).

Transparency: Inform users about data practices, the logic of personalization algorithms, and the scope of data use (Intersoft Consulting, 2016d; UK ICO, 2023).

User Control & Autonomy: Give users meaningful choices to opt out, customize, or limit personalization (Caltrider & MacDonald, 2024; Rosala, 2020).

This circular framework outlines nine interlinked principles, from ethical guidelines and user empowerment to dark pattern prohibitions. They support transparency, fairness, and accountability in AI-driven interface personalization.

Figure 5. Responsible AI-Enhanced UX Design Framework

To assist designers and compliance officers in applying these principles in practice, the following decision tree helps assess whether a UX design choice aligns with ethical personalization standards or risks crossing into manipulation.

Figure 6: Decision Tree (Is This UX Practice Ethical or Manipulative?)
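Because the figure is not reproduced in text, the following is only a rough sketch of how such an assessment might be encoded as a checklist. The four questions are assumptions drawn from the principles listed above and are not the actual nodes of Figure 6; `UxPractice` and `assess` are hypothetical names. The example values describe Lily's onboarding screen from User Scenario #3, with two fields marked as assumed because the scenario does not specify them.

```python
from dataclasses import dataclass


@dataclass
class UxPractice:
    """Hypothetical description of one interface choice under review."""
    discloses_personalization: bool  # is the user told the offer is tailored?
    consent_is_opt_in: bool          # no pre-ticked boxes or default enrollment
    opt_out_is_prominent: bool       # declining is as visible as accepting
    claims_are_accurate: bool        # urgency or social-proof claims are true


def assess(practice: UxPractice) -> tuple[str, list[str]]:
    """Return a coarse verdict plus the reasons that raised concern."""
    concerns: list[str] = []
    if not practice.discloses_personalization:
        concerns.append("personalization is not disclosed")
    if not practice.consent_is_opt_in:
        concerns.append("consent is not opt-in")
    if not practice.opt_out_is_prominent:
        concerns.append("opt-out is hard to find")
    if not practice.claims_are_accurate:
        concerns.append("urgency or social-proof claims may be misleading")
    verdict = "needs review" if concerns else "no concerns flagged"
    return verdict, concerns


lily_onboarding = UxPractice(
    discloses_personalization=False,  # Lily cannot tell whether the offer is tailored
    consent_is_opt_in=True,           # assumed; the scenario does not say
    opt_out_is_prominent=False,       # the only opt-out is a tiny gray link
    claims_are_accurate=True,         # assumed; the scenario does not say
)
verdict, concerns = assess(lily_onboarding)
print(verdict, concerns)
```

A checklist like this cannot settle whether a design is ethical. It can, however, make the questions explicit and give reviewers a record of which criteria prompted a closer look.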

Future Disruptions in UX Ethics

As digital ecosystems continue to evolve, new technologies such as autonomous AI agents, immersive metaverse platforms, and multimodal voice interfaces are poised to introduce novel forms of user manipulation. These environments allow for persistent, hyper-personalized interactions where consent boundaries and decision-making contexts are even more fluid. AI agents may act on a user’s behalf without complete transparency, while metaverse UX could blend attention engineering with biometric data collection, making manipulation harder to detect and regulate. Anticipating these shifts is critical for future-proofing ethical design standards and ensuring that the legal and regulatory frameworks governing AI remain adaptive in the face of immersive and agent-driven technologies.

As AI technologies evolve, new interaction models may intensify the risks of manipulative design.

The infographic below highlights three anticipated technological evolutions that could significantly complicate the ethical regulation of user experience (Gruver, 2024; Joshi, 2025; Liew, 2023).

Figure 7: Anticipated Technological Evolutions

Conclusion

In a digital world increasingly shaped by algorithmic decisions and adaptive interfaces, regulating dark patterns has become a focal point of global data governance. Governments, regulators, and civil society recognize the harm of manipulative design and are converging toward shared definitions, standards, and accountability mechanisms. The path forward requires synthesizing cross-border insights, practicing proactive design ethics, enforcing transparency, and harmonizing AI governance globally.

Cross-Border Convergence on Dark Pattern Regulation: Over the past two years, jurisdictions worldwide, from California and Colorado to the EU, India, South Korea, and Brazil, have issued laws and guidance targeting deceptive interface design. While the legal language and scope may differ, the foundational principles are consistent: design must be honest, consent must be meaningful, and user autonomy must be preserved. This convergence signals the emergence of a normative consensus around dark patterns as a breach of fairness and transparency.

Proactive Design and AI Transparency as Compliance Priorities: The shift from reactive enforcement to proactive governance is clear. Regulators increasingly expect companies to build transparency and fairness into their systems by design. This means offering straightforward interfaces, clear explanations of how UX systems work, and opt-out pathways that are available by default. As AI becomes more context-aware and personalized, interface transparency will no longer be a competitive edge but a compliance imperative.

Future Direction: Standardization and Regulatory Alignment: Looking ahead, cross-border regulatory alignment is poised to become a significant area of focus. The proposed EU Digital Fairness Act and initiatives by the International Consumer Protection and Enforcement Network both aim to codify ethical UX design into enforceable standards.

Final Reflection: The battle against dark patterns is not merely a legal or technical issue; it is a test of how we value digital dignity in the age of AI. As the line between personalization and manipulation blurs, the question is no longer whether we can design interfaces to shape behavior, but whether we should. Ethical UX governance is not only about avoiding fines; it is about sustaining trust, protecting rights, and designing a digital future that serves people, not just platforms. In the end, the interfaces we build are moral choices made visible.

Key Questions for Stakeholders

To move from reflection to action, stakeholders across the tech ecosystem must interrogate their design practices, governance models, and compliance strategies. The table below outlines strategic questions that key stakeholders should consider:

| Stakeholder Group | Guiding Questions |
| --- | --- |
| Civil Society & Advocacy Groups | Are public awareness efforts sufficient to empower digital citizens? How can we support open design audits or participatory governance in tech? |
| Corporate Leadership | How is ethical design embedded into KPIs and governance structures? Are we prepared for cross-border audits or enforcement actions? |
| Policymakers | Are legal frameworks keeping pace with adaptive UX and algorithmic nudging? What oversight tools are needed to detect and prevent manipulative designs? |
| Product Designers & UX Teams | Are personalization features aligned with user intent and not driven by conversion-only metrics? Are opt-out options accessible and meaningfully designed? |
| Regulatory Authorities | Do impact assessment tools adequately evaluate interface risks? Do our consent flows meet global standards on user comprehension and voluntariness? |

These questions foster ethical introspection and serve as a roadmap for operationalizing transparency, fairness, and compliance. Building humane and rights-respecting digital experiences is a collective responsibility, and fulfilling it requires that we keep asking tough questions.

References

1. Aix. (2025, February 21). Korea’s new e-commerce law and its impact on free trials and dark patterns. Aix Post. https://aixpost.com/growth-insight/korea-ecommerce-law-2025/

2. Akin, Gump, Strauss, Hauer, & Feld. (2025, March 27). Utah creates consumer protections from the deceptive use of generative AI. https://www.akingump.com/en/insights/ai-law-and-regulation-tracker/utah-creates-consumer-protections-from-the-deceptive-use-of-generative-ai

3. Angle, K., & Marmor, R. (2024, May 16). Colorado legislature approves AI bill targeting “high-risk” systems and AI labeling. Holland & Knight. https://www.hklaw.com/en/insights/publications/2024/05/colorado-legislature-approves-ai-bill-targeting-highrisk-systems-and

4. Archie, J.C., Boynton, M.R., Kim, A., Mahmood, G., & Rubin, M.H. (2025, May 13). FTC delays enforcement of Click-to-Cancel Rule until July 14, 2025. Latham & Watkins. https://www.lw.com/en/insights/ftc-delays-enforcement-of-click-to-cancel-rule-until-july-14-2025

5. Associated Press. (2024). Lawmakers criticize proposal to block state AI laws. https://apnews.com/article/39d1c8a0758ffe0242283bb82f66d51a

6. Betts, J.G., Ochs, D., & Bell, M.H. (2024, May 20). Colorado’s Artificial Intelligence Act: What employers need to know. Ogletree Deakins. https://ogletree.com/insights-resources/blog-posts/colorados-artificial-intelligence-act-what-employers-need-to-know/

7. Caltrider, J., & MacDonald, Z. (2024, July 18). How to protect your privacy from ChatGPT and other AI chatbots. Mozilla – Privacy Not Included. https://www.mozillafoundation.org/en/privacynotincluded/articles/how-to-protect-your-privacy-from-chatgpt-and-other-ai-chatbots/

8. Chiam, J., Lim, A., Nott, C., Mark, N., Teredesai, A., & Shinde, S. (2024, February 9). Co-Pilot for health: Personalized algorithmic AI nudging to improve health outcomes. arXiv. https://arxiv.org/abs/2401.10816

9. China Law Translate. (2023, July 13). Interim Measures for the Management of Generative Artificial Intelligence Services. https://www.chinalawtranslate.com/en/generative-ai-interim/

10. CNIL. (2024, December 12). Dark patterns in cookie banners: CNIL issues formal notice to website publishers. https://www.cnil.fr/en/dark-patterns-cookie-banners-cnil-issues-formal-notice-website-publishers

11. Colorado Office of the Attorney General. (2022, September 29). Colorado Privacy Act Rules: 4 CCR 904-3. Colorado Department of Law. https://coag.gov/app/uploads/2022/10/CPA_Final-Draft-Rules-9.29.22.pdf

12. European Commission. (2025, February 12). Digital Services Act package. https://digital-strategy.ec.europa.eu/en/policies/digital-services-act-package

13. European Commission. (2019, April 8). Ethics guidelines for trustworthy AI. https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

14. European Parliament. (2025, February 19). EU AI Act: First regulation on artificial intelligence. https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

15. Fard, A. (2025). Ethical considerations when applying AI in UX research. AdamFard. https://adamfard.com/blog/ethical-ai-in-ux-research

16. Federal Trade Commission. (2025a). FTC finalizes order with DoNotPay that prohibits deceptive ‘AI Lawyer’ claims, imposes monetary relief, and requires notice to past subscribers. https://www.ftc.gov/news-events/news/press-releases/2025/02/ftc-finalizes-order-donotpay-prohibits-deceptive-ai-lawyer-claims-imposes-monetary-relief-requires

17. Federal Trade Commission. (2023b). FTC finalizes order requiring Fortnite-maker Epic Games to pay $245 million for tricking users into making unwanted charges. https://www.ftc.gov/news-events/news/press-releases/2023/03/ftc-finalizes-order-requiring-fortnite-maker-epic-games-pay-245-million-tricking-users-making

18. Future of Privacy Forum. (2023, September). Best practices for AI and workplace assessment technologies. https://fpf.org/wp-content/uploads/2024/02/FPF-Best-Practices-for-AI-and-WA-Tech-FINAL-with-date.pdf

19. Government of India. (2023). The guidelines for prevention and regulation of dark patterns. Department of Consumer Affairs. https://consumeraffairs.nic.in/theconsumerprotection/guidelines-prevention-and-regulation-dark-patterns-2023

20. Griffin, J. (2025). Dark Patterns in 2025: Predictions and Practices for Ethical Design. Medium. https://medium.com/design-bootcamp/dark-patterns-in-2025-predictions-and-practices-for-ethical-design-cbd1a5db8d80

21. Gruver, J. (2024, September 4). Navigating the ethical frontier: AI in personalizing user experiences. Medium. https://medium.com/design-bootcamp/navigating-the-ethical-frontier-ai-in-personalizing-user-experiences-71eff285bf82

22. Holweg, M., Younger, R., & Wen, Y. (2022). The reputational risks of AI. California Management Review. https://cmr.berkeley.edu/2022/01/the-reputational-risks-of-ai/

23. Howell, C.T., & Allen, L.B.R. (2024, October 4). Decoding California’s recent flurry of AI laws. Foley & Lardner. https://www.foley.com/insights/publications/2024/10/decoding-california-recent-ai-laws/

24. IAPP. (2020, October). Brazil General Data Protection Law (LGPD, English translation). https://iapp.org/resources/article/brazilian-data-protection-law-lgpd-english-translation/

25. International Standardization Organization. (2019). ISO/IEC 27701:2019 – Security techniques – Extension to ISO/IEC 27001 and ISO/IEC 27002 for privacy information management – Requirements and guidelines. https://www.iso.org/standard/71670.html

26. Intersoft Consulting. (2016a). EU GDPR: Article 5: Principles relating to processing of personal data. https://gdpr-info.eu/art-5-gdpr/

27. Intersoft Consulting. (2016b). EU GDPR: Article 7: Conditions for consent. https://gdpr-info.eu/art-7-gdpr/

28. Intersoft Consulting. (2016c). EU GDPR: Article 22: Automated individual decision-making, including profiling. https://gdpr-info.eu/art-22-gdpr/

29. Intersoft Consulting. (2016d). EU GDPR: Article 13: Information to be provided where personal data are collected from the data subject. https://gdpr-info.eu/art-13-gdpr/

30. Intersoft Consulting. (2016e). EU GDPR: Article 14: Information to be provided where personal data have not been collected from the data subject. https://gdpr-info.eu/art-14-gdpr/

31. Joshi, N. (2025, February 4). AI-driven UX & hyper-personalization in 2025. Medium. https://medium.com/@cast_shadow/ai-driven-ux-hyper-personalization-the-future-of-ux-in-2025-b82c8e544f24

32. Jung, Y., Park, S.H., Shin, L., Kim, G.O., Hyun, J.W., & Paik, S.Y. (2023, August 22). KFTC issues guidelines on self-management of dark patterns. Kim & Chang. https://www.kimchang.com/en/insights/detail.kc?sch_section=4&idx=27878#:~:text=On%20July%2031%2C%202023%2C%20the,in%20their%20online%20user%20interfaces

33. Kreger, A., & Ablazevica, A. (2025). Dark patterns in digital banking compromise financial brands. UXDA Blog. https://theuxda.com/blog/dark-patterns-in-digital-banking-compromise-financial-brands

34. Lagorce, N. (2024, September 16). Six 'dark patterns' used to manipulate you when shopping online. Organization for Economic Cooperation and Development. https://www.oecd.org/en/blogs/2024/09/six-dark-patterns-used-to-manipulate-you-when-shopping-online.html

35. Lalsinghani, G. (2024). Left in the dark: Evaluating the FTC's limitations in combating dark patterns. Berkeley Technology Law Journal, 39(4), 1463-1490. https://btlj.org/wp-content/uploads/2025/02/0007_39-4_Lalsinghani.pdf

36. Law, D. (2024, December 17). Brazilian Senate approves artificial intelligence regulation. Daniel. https://www.daniel-ip.com/en/blog/brazilian-senate-approves-artificial-intelligence-regulation/

37. Levi, S.D., Ridgeway, W.E., Simon, D.A., Slawe, M.C., & Oh, A. (2024, April 5). Utah becomes the first state to enact AI-centric consumer protection law. Skadden. https://www.skadden.com/insights/publications/2024/04/utah-becomes-first-state

38. Lichti, S. (2022, August 26). Combating dark patterns: A look at CPRA guidelines. Transcend. https://transcend.io/blog/dark-patterns-cpra

39. Liew, R. (2023, September 13). Ethical marketing: Definition, principles, and examples. Ahrefs Blog. https://ahrefs.com/blog/ethical-marketing/

40. Mathur, A., Acar, G., Friedman, M. G., Lucherini, E., Mayer, J., Chetty, M., & Narayanan, A. (2019). Dark patterns at scale: Findings from a crawl of 11K shopping websites. Proceedings of the ACM on Human-Computer Interaction, 3(CSCW), 1-32. https://doi.org/10.1145/3359183

41. Martins, L. (2025, February 4). Brazil’s AI law faces uncertain future as big tech warms up to Trump. Tech Policy Press. https://www.techpolicy.press/brazils-ai-law-faces-uncertain-future-as-big-tech-warms-to-trump/

42. McGrath, M. (2024, September 17). What is the Digital Fairness Act? European Commission. https://www.digital-fairness-act.com/

43. McKell, M.K., & Teuscher, J.D. (2024). S.B. 194: Social media regulation amendments. Utah State Legislature. https://le.utah.gov/~2024/bills/static/SB0194.html

44. Nissenbaum, H. (2010). Privacy in context: Technology, policy, and the integrity of social life. ResearchGate. https://www.researchgate.net/publication/41024630_Privacy_in_Context_Technology_Policy_and_the_Integrity_of_Social_Life

45. OECD. (2024a). OECD Blog. https://www.oecd.org/en/blogs/2024/09/six-dark-patterns-used-to-manipulate-you-when-shopping-online.html

46. OECD. (2024b). Digital. https://www.oecd.org/en/topics/digital.html

47. OECD. (2024c). AI principles. https://www.oecd.org/en/topics/sub-issues/ai-principles.html

48. OECD. (2022, October 26). Dark commercial patterns. https://www.oecd.org/en/publications/dark-commercial-patterns_44f5e846-en.html

49. OECD. (2013). Privacy guidelines. https://www.oecd.org/en/about/data-protection.html

50. Perforce. (2022, December 1). The 4 biggest data privacy risks of non-compliance with regulations. https://www.perforce.com/blog/pdx/data-privacy-risks

51. Personal Data Protection Commission – Singapore. (2021, February 1). Personal Data Protection Act Overview. https://www.pdpc.gov.sg/overview-of-pdpa/the-legislation/personal-data-protection-act

52. Personal Information Protection Act—Amended/South Korea (PIPA). (2023, September 15). Amended Personal Information Protection Act (PIPA) and its enforcement decree become effective. Personal Information Protection Commission – South Korea. https://pipc.go.kr/eng/user/ltn/new/noticeDetail.do?bbsId=BBSMSTR_000000000%20001&nttId=2331

53. Petrova, M. (2025, May 7). Sweden warns major companies over cookie banner failures. Avoid these common GDPR mistakes. Consentmo. https://www.consentmo.com/blog-posts/sweden-warns-major-companies-over-cookie-banner-failures-avoid-these-common-gdpr-mistakes

54. Pimentel, I. (2025, February 5). Swedish DPA targets dark patterns in cookie banners: Is your website compliant? Cookie Information. https://cookieinformation.com/resources/blog/blog-swedish-dpa-imy-dark-patterns-april-2025/

55. Rodriguez, R., Titone, B., & Rutinel, M. (2024). Consumer protections for artificial intelligence. SB24-205: Colorado General Assembly. https://leg.colorado.gov/bills/sb24-205

56. Romanenko, N. (2025, May 8). How AI transforms UX design. Medium. https://medium.com/ux-ui-design-diaries-outsoft/how-ai-transforms-ux-design-64303cc0c569

57. Rosala, M. (2020, November 29). User control and freedom (Usability Heuristic #3). NN/g. https://www.nngroup.com/articles/user-control-and-freedom/

58. Saudi Data & AI Authority. (2023, September). AI ethics principles (1.0). https://sdaia.gov.sa/en/SDAIA/about/Documents/ai-principles.pdf

59. Scarcella, M. (2024, June 12). FTC lawsuit over Amazon Prime’s program set for June 2025 trial. Reuters. https://www.reuters.com/legal/litigation/ftc-lawsuit-over-amazons-prime-program-set-june-2025-trial-2024-06-12/

60. Seiber, E., & Carreiro, P.M. (2024, September 5). California Privacy Protection Agency issues dark patterns enforcement advisory. Carlton Fields. https://www.carltonfields.com/insights/publications/2024/california-privacy-protection-agency-issues-dark-pattern-enforcement-advisory

61. Sheikh, A. (2024, April 23). Transparency must be a cornerstone of the Digital India Act. Tech Policy Press. https://www.techpolicy.press/transparency-must-be-a-cornerstone-of-the-digital-india-act/

62. Shivhare, S., & Park, K.B. (2025, April 18). South Korea’s new AI framework act: A balancing act between innovation and regulation. Future of Privacy Forum. https://fpf.org/blog/south-koreas-new-ai-framework-act-a-balancing-act-between-innovation-and-regulation/

63. Smart Nation Singapore – 2.0. (2023). Singapore National AI Strategy 2.0. https://www.smartnation.gov.sg/nais/

64. Susser, D., Roessler, B., & Nissenbaum, H. (2019). Online manipulation: Hidden influences in a digital world. Georgetown Law Technology Review, 4(1), 1-45. https://georgetownlawtechreview.org/online-manipulation-hidden-influences-in-a-digital-world/GLTR-01-2020/

65. Tobon, C., & Hansen, J. (2024, May 21). Colorado enacts artificial intelligence law. Shook, Hardy, & Bacon. https://www.shb.com/intelligence/client-alerts/pds/may-2024-tobon-hansen-colorado-ai-law

66. Tucker, T. (2024, July 29). Application planning: Why is data privacy so important in the planning stage? Perforce. https://www.perforce.com/blog/pdx/application-planning

67. UK ICO. (2025a). Consent. https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/lawful-basis/a-guide-to-lawful-basis/consent/

68. UK ICO. (2025b). What privacy information should we provide? https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/individual-rights/the-right-to-be-informed/what-privacy-information-should-we-provide/#purpose

69. UK ICO. (2023, March 15). Guidance on AI and data protection. https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/artificial-intelligence/guidance-on-ai-and-data-protection/

70. UK ICO. (2020). Age appropriate design: A code of practice for online services. https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/childrens-information/childrens-code-guidance-and-resources/age-appropriate-design-a-code-of-practice-for-online-services/

71. Wachter, S., Mittelstadt, B., & Floridi, L. (2017, June 3). Why a right to explanation of automated decision-making does not exist in the General Data Protection Regulation. International Data Privacy Law, 7(2), 76-99. https://academic.oup.com/idpl/article-abstract/7/2/76/3860948?redirectedFrom=fulltext&login=false

72. White & Case. (2025, March 31). AI watch: Global regulatory tracker – China. https://www.whitecase.com/insight-our-thinking/ai-watch-global-regulatory-tracker-china

73. World Economic Forum. (2021, September 3). Designing artificial intelligence technologies for older adults. https://www.weforum.org/publications/designing-artificial-intelligence-technologies-for-older-adults/

74. Yavorsky, S., & Schroder, C. (2020, August 19). AI update: EU-High Level Expert Group publishes requirements for trustworthy AI and European Commission unveils plans for AI regulation. Orrick. https://www.orrick.com/en/Insights/2020/08/AI-Update-EU-HighLevel-Expert-Group-Publishes-Requirements-for-Trustworthy-AI-and-European-Commissi

75. Yeung, K. (2016, May 22). 'Hypernudge': Big Data as a mode of regulation by design. Information, Communication & Society, 20(1), 118–136. https://doi.org/10.1080/1369118X.2016.1186713

76. Zaheer, S. (2019, October). Ethical UX design: Preventing manipulative interfaces and promoting user trust. International Journal of Engineering Technology Research & Management, 3(10). https://www.researchgate.net/publication/391019688_Ethical_UX_Design_Preventing_Manipulative_Interfaces_and_Promoting_User_Trust

77. Zaytseva, D. (2024, September 26). The ethics of personalization in UX: Where do we draw the line? Medium. https://medium.com/design-bootcamp/the-ethics-of-personalization-in-ux-where-do-we-draw-the-line-9bad1ab6cbda
